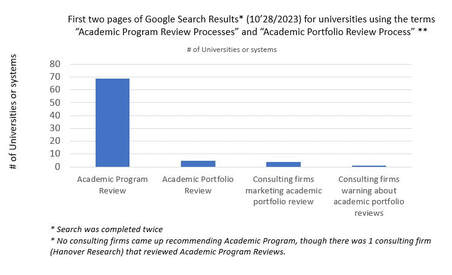

Introduction

The University of North Carolina Greensboro is currently undergoing an Academic Portfolio Review, which its web site describes as synonymous with Academic Program Review. The Chancellor has stated (ad nauseam) that UNCG's Academic Portfolio Review is a "best practice." He also indicated that the type of detailed program reviews that we do, partly for accreditation but mostly for real examination and external evaluation, are not as useful as an Academic Portfolio Review (at least for UNCG right now).

There is confusion among faculty at my academic institution, UNCG. One of our web sites answers the question "What is Academic Program Review?" with the following:

"Academic program review: also known as 'Academic Portfolio Review': this process is a best practice in higher education. UNCG has not undertaken a comprehensive academic portfolio review in more than 15 years. During this process, the faculty, department chairs, deans, and university staff review the performance of each academic program considering factors such as enrollment and student interest/demand, student success and graduation, student credit hour production, scholarly and community distinction and grant funding among other factors."

Several times over the last two years, UNCG leaders have used the phrases "research shows" or "best practice" to win support for implementing various actions. However, weblinks to a research paper that supports the desired action are not generally provided. The one exception was mid-term grade reports, where a research paper was provided but presented data somewhat irrelevant to the action: the question was whether mid-term grade reports increase student success (there is very little data to support that), while the paper addressed whether mid-term grades predict final grades. I don't remember seeing any documentation when an action was spun as a "best practice."

I am on the autism spectrum.
So, I think I may have a harder time letting go when I feel like there is dishonesty, disingenuousness, or spin on issues I care about. It bugs me when I feel I am being spun.

I had lots of experience with academic program reviews during my 25 years of higher ed administration. I don't have any experience with academic portfolio reviews, so I don't know if they are a best practice, or even how they relate to academic program reviews. Academic program reviews almost always have a faculty-led detailed self-study followed by an external evaluation from disciplinary peers. The UNCG academic portfolio review uses only internal data to compare programs and departments against each other. It makes no use of external review or comparison against peer departmental or program data, and it does not draw on previous departmental or program reviews completed on the campus. This confused me.

So, I tried to empirically determine the following about academic program reviews and academic portfolio reviews: 1) Are academic program reviews and academic portfolio reviews the same thing, as UNCG claims on its web site? 2) Are academic portfolio reviews a "best practice" based on what universities convey on their web sites?

I discovered during this short study that there is academic research on academic program and academic portfolio reviews (e.g., Dickeson, 2009; see also the good 2016 article in Inside Higher Ed, "Prioritizing Anxiety"). However, I took a fully empirical approach, simply looking at what universities actually indicate they do regarding academic program or academic portfolio review. I truly did not know the answer. The simple hypothesis I tried to test is: a sizeable number of universities should indicate on their web sites that they do academic portfolio reviews if it is a "best practice." As you will see below, the results did not support this hypothesis.

Methods

This was a completely empirical analysis.
I asked what universities say on their web sites regarding academic program review and academic portfolio review, and I used Google to find out. I did two Google searches: 1) "Academic Program Review Processes" and 2) "Academic Portfolio Review Processes." I counted the academic campuses that came up on the first two pages of each search and listed them with their web links in the results. I did each search twice. Different universities came up in different orders in the academic program review search, but not in the academic portfolio review search. Google searches are not the most sophisticated research method, but they are a reasonable way to discover how terms are used on university web sites.

Results:

The tabulation of the Google searches is shown in the bar chart at the top of this blog post. Sixty-nine universities reported that they do academic program review (with at least a self-study and external review). Four universities and one university system reported that they do academic portfolio reviews. Four consulting firms had marketing documents for academic portfolio reviews but none for academic program reviews. One consulting firm published a short document warning of the challenges in academic portfolio review.

Academic Program Review

So, What is Academic Program Review?

When I Googled "Academic Program Review," the first definition that came up was from Iowa State:

"The purpose of academic program review is to guide the development of academic programs on a continuous basis. Program review is a process that evaluates the status, effectiveness, and progress of academic programs and helps identify the future direction, needs, and priorities of those programs. As such, it is closely connected to strategic planning, resource allocation, and other decision-making at the program, department, college, and university levels.
During the review process, external academic teams discuss departmental plans for the future including departmental goals and plans to achieve those goals."

It goes on to say:

"The goal of a program review should be the articulation of agreed-upon action plans for further development of the academic program. External academic review teams are invited to consider issues and challenges, and to consult with faculty and administration on future directions. The program review process should focus on improvements that can be made using resources that currently are available to the program. Consideration may also be given, however, to proposed program improvements and expansions that would require additional resources; in such cases, the need and priority for additional resources should be clearly specified."

Review by Hanover Research

A document titled "Best Practices in Academic Review (Hanover Research)" was listed in the search (under the link American Sociological Association). The paper reviews a range of program review techniques and includes case studies from Howard University, Indiana State University, The University of Wisconsin-Eau Claire, Washington State University and The University of Cincinnati. Some of these schools have used processes based on Robert Dickeson's work "Prioritizing Academic Programs and Services: Reallocating Resources to Achieve Strategic Balance," which seems to be the basis of the Academic Portfolio Review that rpk Group, who consulted with UNCG, recommended and that UNCG adopted, but it also suggests some analysis that UNCG did not include. I did not know of Dickeson's work prior to this search.

The Hanover Research report's general conclusion is:

"Broadly speaking, an academic program review can be defined as an attempt to evaluate the performance of curricula, departments, faculty, and/or students at a degree-granting institution.
While there is no universally-accepted model or methodology for conducting a program review, three primary elements are commonly employed:

UNCG's process is not fully consistent with what Hanover Research found. UNCG's review has components of an internal faculty review, but it is not a detailed self-study. There is no element of external review, which is needed to assess quality and context. So, there is no comprehensive integration of the two studies.

Tabulation of universities that use Academic Program Review

I tabulated the first two pages of my Google search for "academic program review processes." I discovered that the following 69 schools all use a process with a self-study by faculty and an external review. UNCG was the only institution on the first two pages of my Google search that uses Academic Program Review as a synonym for Academic Portfolio Review. Here are the 69 schools and 1 consultant (other than UNCG) on the first two pages of my Google search. All of the schools are linked to their sites explaining academic program review.

What is Academic Portfolio Review?

UNCG's definition is in the 2nd paragraph of this piece (see here for UNCG's definition for students; see here for UNCG's process). rpk Group seems to make clear in their recommendations to the University of Kansas Board of Regents that they recommend substituting their academic portfolio review framework for academic program review. They write:

"Adopt the Academic Portfolio Review framework [rpk's framework is based on institutional ROI] as an annual assessment and modify the current program review process such that the framework is used to identify the programs that are needed for review as opposed to cycling each program through individually on an eight-year cycle. This recommendation maintains institutional control over program review but provides the Regents with a framework through which to manage the process and encourage more immediate action at the institution level."

Emporia State University is an example of a university that implemented rpk's academic portfolio framework and made substantial cuts to academic programs and faculty. The results after one year show a negative ROI so far (a 12.5% decline in enrollment).

The Educational Advisory Board (EAB) published a short document explaining five myths about what academic portfolio reviews can and can't do. The myths are below. Please see EAB's document for their description.

Universities that use Academic Portfolio Reviews

I did a Google search for "Academic Portfolio Review processes" and examined the same number of Google results as before. I repeated the search a second time. Each time, five institutions on the first two pages of search results indicated that they conduct academic portfolio reviews (one was a Board of Regents). Many other institutions (like Rhode Island School of Design) were in the search results because they have a process for helping students create portfolios for review. There were four consulting firms promoting academic portfolio reviews, with Hanover Research listed from three different links. There was one consulting firm warning of challenges with academic portfolio reviews. Institutions indicating that they conduct Academic Portfolio Reviews included:

Consultants/articles recommending or giving tips on academic portfolio reviews that came up in the search were:

The consulting firm EAB also had a short article, "Five myths about academic program portfolio review," warning of significant challenges that institutions need to overcome in academic portfolio review.

Conclusion:

There are two large caveats to this study: (1) a Google search is not the best way to research best practices for anything, and (2) it may not pick up academic portfolio reviews done recently or many years ago. Nonetheless, the analysis shows two things: (1) Academic program reviews that use detailed self-studies and external review seem empirically to be the best practice in academe, since far more institutions doing academic program reviews (with self-study and external review) show up in the first two pages of a Google search than institutions doing academic portfolio reviews; and (2) the Google search revealed almost as many consulting firms (4) marketing academic portfolio reviews as there were academic institutions that listed them as a process they use (5). Those consulting firms were the only web sites calling for academic portfolio reviews. There were none marketing academic program reviews.

When I was provost, I was used to conducting program viability reviews every year to find underperforming programs (and many were cut). We used academic program reviews to assess quality against peers, to assess whether programs were underperforming, and for continuous improvement. The current use of academic portfolio reviews seems to be a short-term tactic for universities to appear data-driven in making budget cuts and maybe reallocations, by comparing units within a campus based on metrics that I think are hard to compare against each other for different disciplines.
I think that if high-quality academic program review is truly used and monitored, and if academic program reviews are considered alongside annual program viability audits, then universities should be able to make truly data-informed decisions in real time about existing programs, without using faculty time to try to apply metrics they may or may not understand to compare disciplines they are not familiar with.

When budgets are challenged by enrollment declines, in my opinion, universities need to unleash the creativity of deans, department heads/chairs and faculty to modify or create new programs aimed at increasing enrollment. That may require some sort of budget incentives in incremental budget models. To unleash creativity, time becomes the key resource (which is why programs need incentives, so they can teach effectively if modified or new programs generate enrollment increases). I think this is a better use of faculty time than trying to figure out metrics and how to apply them, and comparing apples and oranges.
